What is explainable AI, or XAI?

Explainable AI is a set of processes and methods that allows users to understand and trust the results and output created by AI and machine learning (ML) algorithms. The explanations accompanying AI/ML output may target users, operators, or developers and are intended to address concerns and challenges ranging from user adoption to governance and systems development. This “explainability” is core to AI’s ability to garner the marketplace trust and confidence needed to spur broad AI adoption and benefit. Other related and emerging initiatives include trustworthy AI and responsible AI.

How is explainable AI implemented?

The U.S. National Institute of Standards and Technology (NIST) states that four principles drive XAI:

- Explanation: Systems deliver accompanying evidence or reason(s) for all outputs.

- Meaningful: Systems provide explanations that are understandable to individual users.

- Explanation accuracy: The explanation correctly reflects the system’s process for generating the output.

- Knowledge limits: The system operates only under conditions for which it was designed or when its output has achieved sufficient confidence levels.
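As a rough illustration of how the "Explanation" and "Knowledge limits" principles might combine in practice, the sketch below attaches evidence to every output and withholds the answer when confidence is too low. The names `ExplainedOutput` and `answer_with_limits`, the 0.8 threshold, and the sample evidence strings are all assumptions made for this example; they do not come from NIST or from any particular product.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ExplainedOutput:
    prediction: Optional[str]  # None when the system declines to answer
    confidence: float
    evidence: List[str]        # reasons that accompany the output


def answer_with_limits(prediction: str, confidence: float,
                       evidence: List[str],
                       threshold: float = 0.8) -> ExplainedOutput:
    """Return the prediction with its evidence, or abstain below the threshold.

    The threshold is a hypothetical value; a real system would calibrate it.
    """
    if confidence < threshold:
        # Knowledge limits: report why no answer is given instead of guessing.
        reason = (f"confidence {confidence:.2f} is below "
                  f"threshold {threshold:.2f}; no answer given")
        return ExplainedOutput(None, confidence, evidence + [reason])
    # Explanation: the evidence always travels with the output.
    return ExplainedOutput(prediction, confidence, evidence)


# Hypothetical inputs, invented for illustration only.
confident = answer_with_limits("faulty cable", 0.93,
                               ["error counters rising on one port"])
uncertain = answer_with_limits("faulty cable", 0.41,
                               ["error counters slightly elevated"])

print(confident)
print(uncertain)
```

Returning an explicit abstention, rather than a low-confidence guess, keeps the reason for declining visible to the user alongside the evidence that was considered.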

NIST notes that explanations may range from simple to complex and that they depend upon the consumer in question. The agency illustrates some explanation types using the following five non-exhaustive sample explainability categories:

- User benefit

- Societal acceptance

- Regulatory and compliance

- System development

- Owner benefit

Why is explainable AI important?

Explainable AI is a crucial component for winning, growing, and maintaining trust in automated systems. Without trust, AI (and, specifically, AI for IT operations, or AIOps) won’t be fully embraced, leaving the scale and complexity of modern systems to outpace what’s achievable with manual operations and traditional automation.

When trust is grounded in explanations, the practice of “AI washing” (implying that a product or service is AI-driven when AI’s role is tenuous or absent) becomes easier to spot, helping both practitioners and customers with their AI due diligence. Establishing trust and confidence in AI affects the scope and speed of its adoption, which in turn determines how quickly and widely its benefits can be realized.

When tasking any system to find answers or make decisions, especially those with real-world impacts, it’s imperative that we can explain how a system arrives at a decision, how it influences an outcome, or why actions were deemed necessary.

Benefits of explainable AI

The benefits of explainable AI are multidimensional. They relate to informed decision-making, risk reduction, increased confidence and user adoption, better governance, more rapid system improvement, and the overall evolution and utility of AI in the world.

What problem(s) does explainable AI solve?

Many AI and ML models are opaque and their outputs unexplainable. The capacity to expose and explain why certain paths were followed or how outputs were generated is pivotal to the trust, evolution, and adoption of AI technologies.

Shining a light on the data, models, and processes allows operators and users to gain insight and observability into these systems for optimization using transparent and valid reasoning. Most importantly, explainability enables any flaws, biases, and risks to be more easily communicated and subsequently mitigated or removed.

How explainable AI creates transparency and builds trust

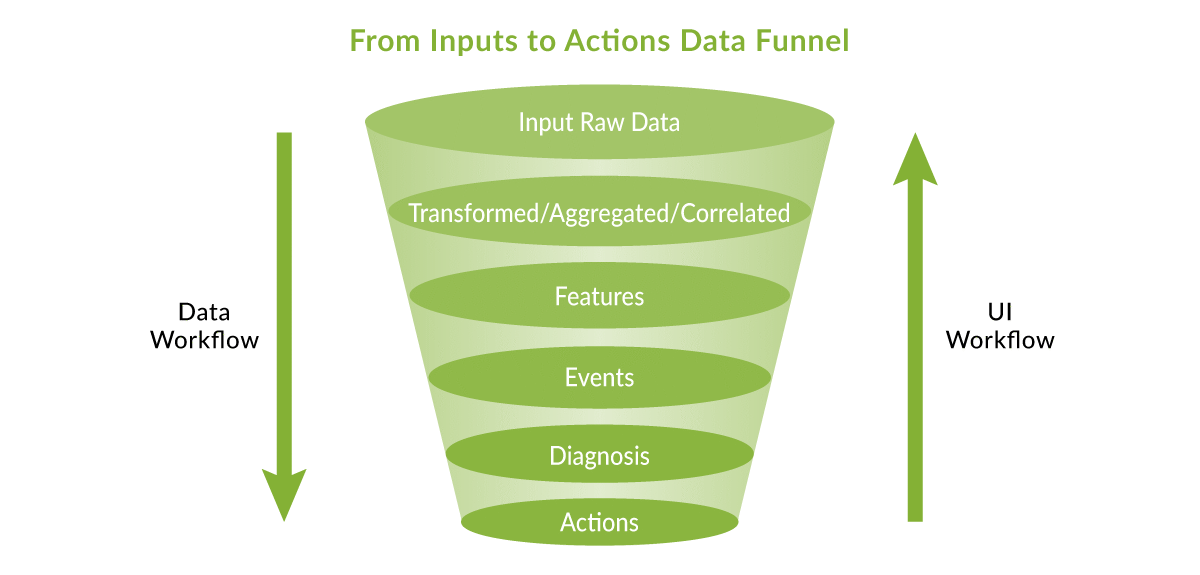

To be useful, initial raw data must eventually result in either a suggested or executed action. Asking a user to trust a wholly autonomous workflow from the outset is often too much of a leap, so it’s advised to allow a user to step through supporting layers from the bottom up. By delving back into events tier by tier, the user interface (UI) workflow allows you to peel back the layers all the way to raw inputs. This facilitates transparency and trust.

A framework that allows domain experts to satisfy their skepticism by digging deeper while also enabling a novice to search as far as their curiosity goes enables both beginners and seasoned veterans to establish trust as they increase their productivity and learning. This engagement also forms a virtuous cycle that can further train and hone AI/ML algorithms for continuous system improvements.

The flow of data in an AI-driven user interface
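The tier-by-tier drill-down described in this section can be pictured as a chain of records in which each layer points to the layer that supports it. The sketch below is one possible shape for such a structure, not a description of any actual product interface; the `Node` class, the layer names, and the sample summaries are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    layer: str     # e.g. "action", "insight", "event", "raw input"
    summary: str
    supported_by: List["Node"] = field(default_factory=list)


def drill_down(node: Node, depth: int = 0) -> List[str]:
    """Return one indented line per node, from an action down to raw inputs."""
    lines = ["  " * depth + f"[{node.layer}] {node.summary}"]
    for child in node.supported_by:
        lines.extend(drill_down(child, depth + 1))
    return lines


# Hypothetical chain, invented for illustration only.
raw = Node("raw input", "interface error counters (sample)")
event = Node("event", "error rate exceeded baseline", [raw])
insight = Node("insight", "pattern consistent with a faulty cable", [event])
action = Node("action", "suggest replacing the cable", [insight])

trace = drill_down(action)
print("\n".join(trace))
```

Because every node keeps a reference to its supporting evidence, a skeptical expert can follow the chain all the way to the raw inputs, while a novice can stop at whichever layer answers their question.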

How to use explainable AI to evaluate and reduce risk

Because data networking relies on well-defined protocols and data structures, AI can make substantial headway there with little risk of discrimination or human bias. When tasked with neutral problem spaces such as troubleshooting and service assurance, applications of AI can be well-bounded and responsibly embraced.

It’s vital to have your vendor answer some basic technical and operational questions to help unmask and avoid AI washing. As with any due diligence and procurement effort, the level of detail in the answers can provide important insights. The responses may require some technical interpretation, but asking is still recommended to help verify that vendor claims are viable.

As with any technology, engineering and leadership teams set criteria to evaluate proposed purchases, and related decisions are based on evidence. To reduce risk and aid with due diligence, a few sample questions for AI/ML owners and users to ask are outlined below:

- What algorithms make up and contribute to the solution?

- What data is ingested, and how is it cleaned?

- Where is the data sourced from (and is it customized per tenancy, account, or user)?

- How are parameters and features engineered from the network space?

- How are models trained, re-trained, and kept fresh and relevant?

- Can the system itself explain its reasoning, recommendations, or actions?

- How is bias eliminated or reduced?

- How does the solution or platform improve and evolve automatically?

Additionally, pilots or trials are always recommended to validate the promises or claims made about AI services or systems.

Explainable AI in action at Juniper

The responsible and ethical use of AI is a complex topic but one that organizations must address. Juniper Mist AI Innovation Principles guide our use of AI in our services and products. We have also written extensively about AI/ML and our AIOps approach, including AI data and primitives, AI-driven problem solving, interfaces, and intelligent chatbots, all of which help detect and correct network anomalies while improving operations using a better set of tools.

XAI can come in many forms. For example, Juniper AIOps capabilities include performing automatic radio resource management (RRM) in Wi-Fi networks and detecting issues, such as a faulty network cable. Some Juniper XAI tools are available from the Mist product interface, which you can demo in our self-service tour. Sign up here to get access today.

From a user and operator perspective, be on the lookout for a range of new features in products based on our Mist AI™ engine and Marvis Virtual Network Assistant that will showcase greater explainability around the methods, models, decisions, and confidence levels to increase trust and transparency.

Explainable AI FAQs

What is meant by explainable AI?

Explainable AI is a set of processes and methods that allow users to understand and trust the results and output created by AI/ML algorithms. The explanations accompanying AI/ML output may target users, operators, or developers and are intended to address concerns and challenges ranging from user adoption to governance and systems development.

What is an explainable AI model?

An explainable AI model is one with characteristics or properties that facilitate transparency, ease of understanding, and an ability to question or query AI outputs.

Why is explainable AI important?

Because explainable AI details the rationale for an AI system’s outputs, it enables the understanding, governance, and trust that people must have to deploy AI systems and have confidence in their outputs and outcomes. Without XAI to help build trust and confidence, people are unlikely to broadly deploy or benefit from the technology.

What are the benefits of explainable AI?

There are many benefits to explainable AI. They relate to informed decision-making, reduced risk, increased AI confidence and adoption, better governance, more rapid system improvement, and the overall evolution and utility of AI in the world.

Does explainable AI exist?

Yes, though it’s in a nascent form due to still-evolving definitions. While it’s more difficult to implement XAI on complex or blended AI/ML models with a large number of features or phases, XAI is quickly finding its way into products and services to build trust with users and to help expedite development.

What is explainability in deep learning?

Deep learning is sometimes considered a “black box,” which means that it can be difficult to understand the behavior of a deep-learning model and how it reaches its decisions. Explainability seeks to make that behavior understandable. One technique used to explain deep-learning models is SHAP (SHapley Additive exPlanations), which is based on Shapley values from cooperative game theory. SHAP values explain a specific prediction by attributing to each input feature its contribution to that prediction. Research into evaluating different explanation methods is ongoing.
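To make the idea of feature attribution concrete, the sketch below computes exact Shapley values for a tiny, made-up scoring function by enumerating every coalition of features. It is a minimal illustration of the underlying definition only: the function `toy_score` and the feature names are invented for this example, and practical SHAP tooling approximates these values against a real model and a baseline dataset rather than enumerating coalitions.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, FrozenSet, List


def shapley_values(features: List[str],
                   value: Callable[[FrozenSet[str]], float]) -> Dict[str, float]:
    """Exact Shapley values: weighted average marginal contribution of each
    feature over all coalitions of the remaining features."""
    n = len(features)
    result = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for k in range(len(others) + 1):
            # Weight of a coalition of size k: k! (n - k - 1)! / n!
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = frozenset(subset)
                total += weight * (value(s | {f}) - value(s))
        result[f] = total
    return result


def toy_score(present: FrozenSet[str]) -> float:
    """Hypothetical model output given which features are 'present'."""
    score = 0.0
    if "latency" in present:
        score += 4.0
    if "jitter" in present:
        score += 2.0
    if "latency" in present and "jitter" in present:
        score += 2.0  # interaction effect shared by both features
    return score


values = shapley_values(["latency", "jitter", "rssi"], toy_score)
for name, contribution in sorted(values.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {contribution:.2f}")
```

In this toy case the attributions sum to the full score (8.0), the interaction term is split evenly between the two features that produce it, and the feature that never affects the score receives zero. Exhaustive enumeration grows exponentially with the number of features, which is why approximation is used for real models.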

What explainable AI features does Juniper offer?

XAI can come in many forms. For example, Juniper offers blogs and videos that describe the ML algorithms used in several AIOps capabilities such as performing automatic radio resource management (RRM) in Wi-Fi networks or detecting a faulty network cable (see the VIDEO resources below). The Marvis Application Experience Insights dashboard uses SHAP values to identify the network conditions (features) that are causing poor application experiences such as a choppy Zoom video. Some of these XAI tools are available from the Mist product interface, which you can demo in our self-service tour. Sign up here to get access today.